AIGC Advertisement

This project explores the intersection of AI and content creation. It focuses on designing intuitive interfaces and workflows for generating, editing, and managing AI-generated content (AIGC). By streamlining the AIGC workflow for advertisement production, it improves efficiency and gives creators and businesses a smoother experience.

Content Strategy

Blender, Adobe Suite

Problem Statement

Challenge

.Monks seeks to reduce the time and cost of advertisement production while integrating AI solutions into the firm’s existing advertising and marketing workflow. Traditional production methods are resource-intensive, and existing AI tools lack intuitive interfaces, which makes adoption across teams difficult.

Goal

Develop an AI-assisted workflow that streamlines production, reduces costs, and is easy to use firm-wide, so that graphic designers can adopt it quickly with minimal training. The project focuses on building the ComfyUI workflow within one month for the Under Armour campaign, while keeping it scalable and adaptable for future campaigns.

Research & Insights

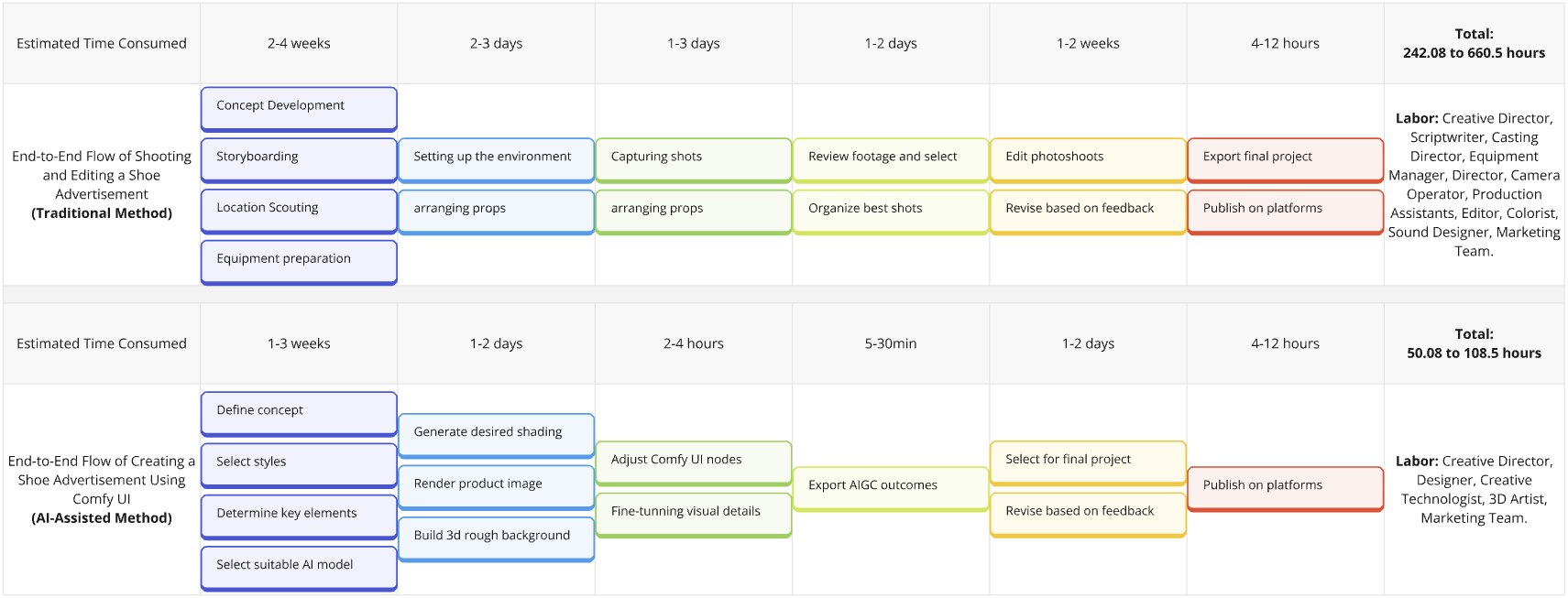

Comparative Workflow Analysis:

Compared with the traditional workflow, the AI-assisted method accelerates production, reduces labor costs, and enhances creative flexibility, making it a scalable solution for future advertisement campaigns.

User Research for ComfyUI:

We conducted interviews and surveys with key stakeholders, including the marketing team, project managers, and graphic designers. The research aimed to identify pain points in existing processes, assess AI adoption challenges, and determine key requirements for an AI-assisted workflow.

Key Findings:

1. Enhance eCommerce visuals by moving beyond traditional white-background-only imagery.

2. AI-generated ads require accurate product representation with consistent branding.

3. Integrate post-production VFX to augment photoshoot creativity, with a focus on high-impact, creative close-up shots that elevate product presentation.

Process

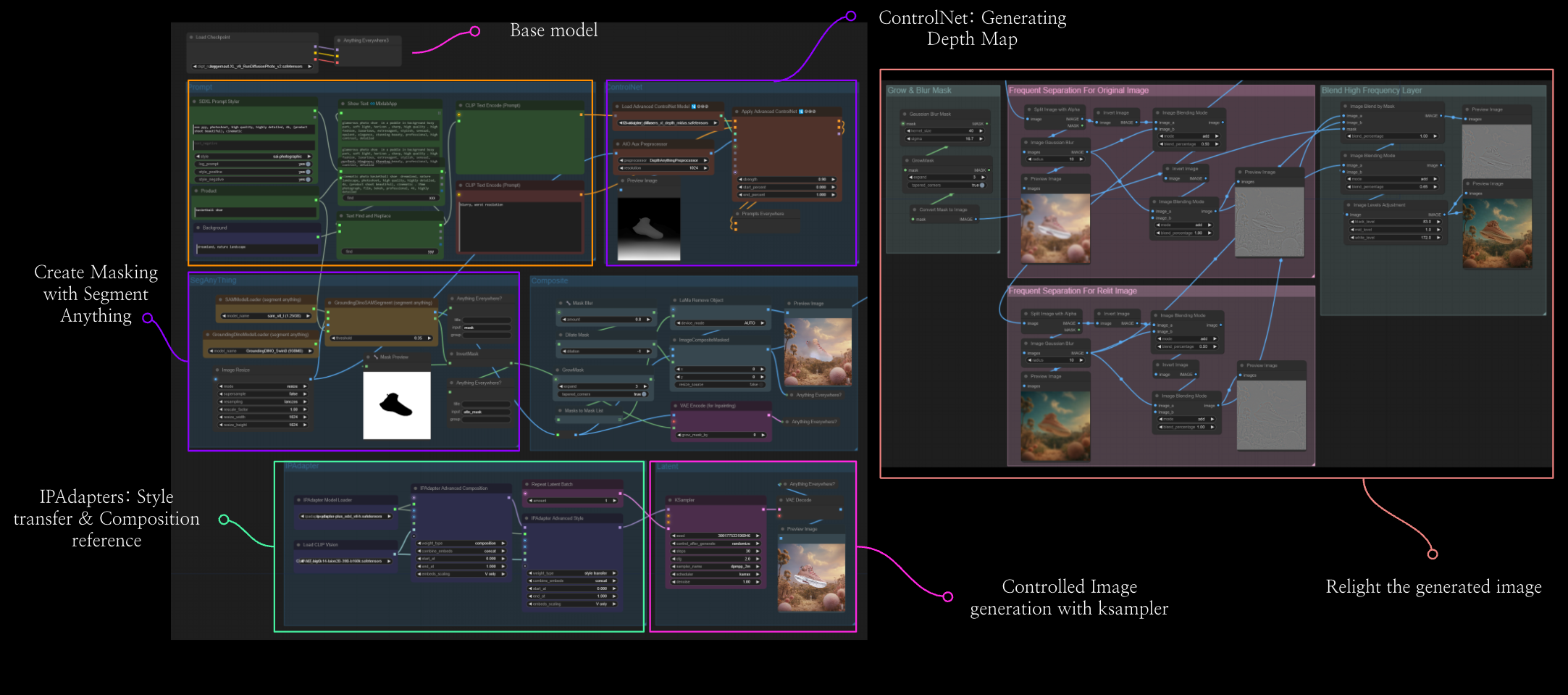

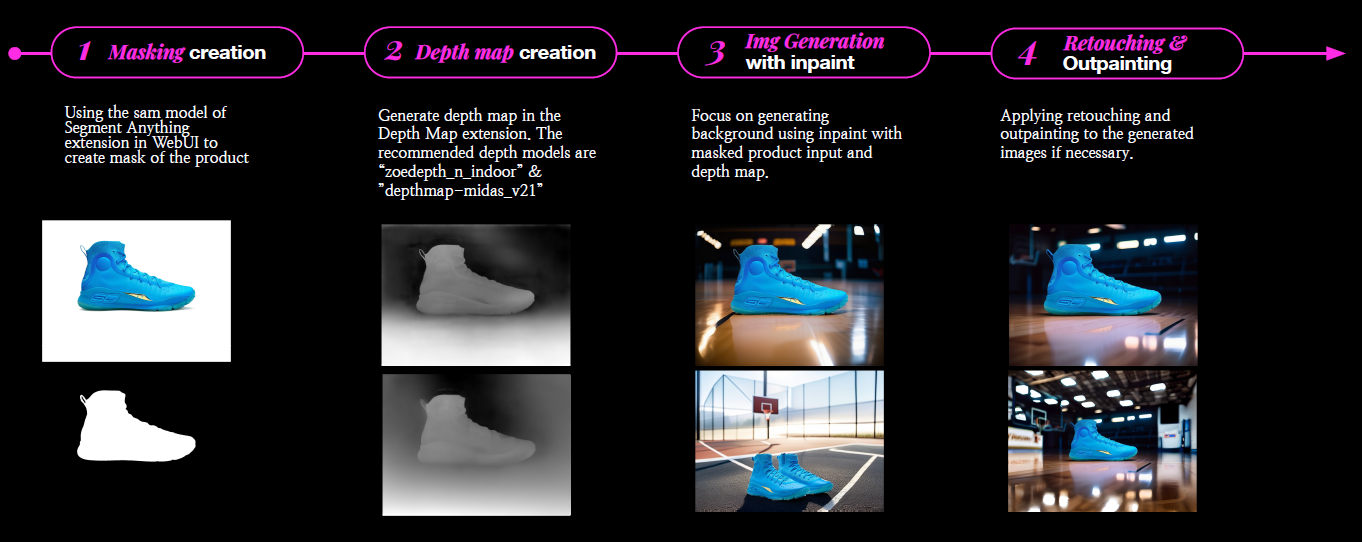

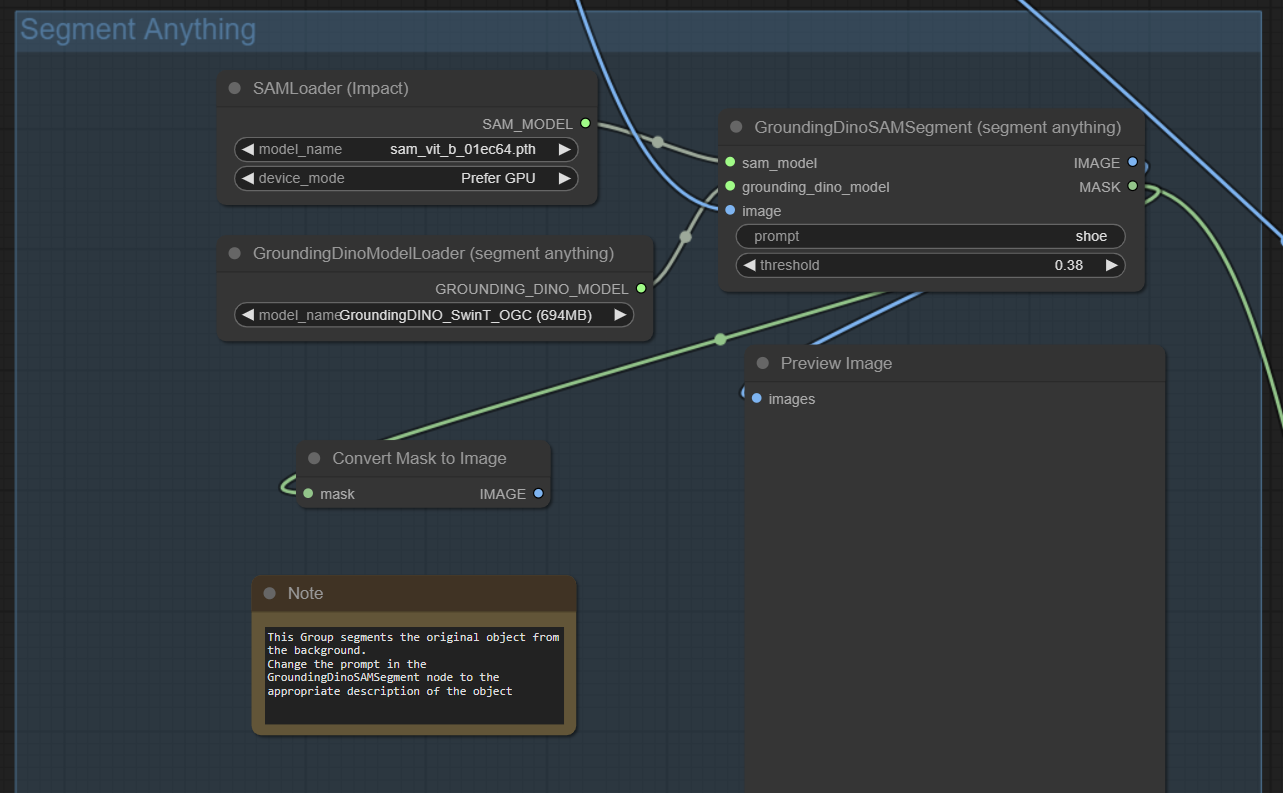

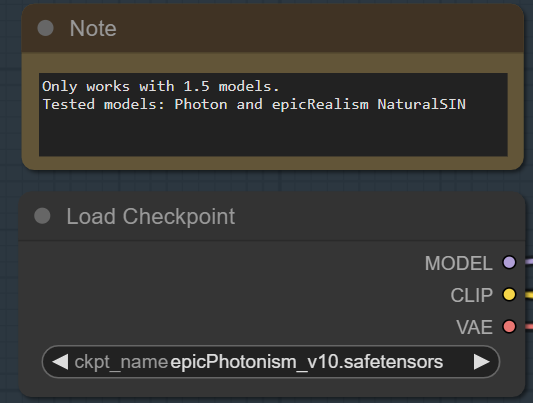

For the Under Armour shoe campaign, we developed and tested three distinct ComfyUI workflows that integrate tools such as Blender, Stable Diffusion, and Large Language Models (LLMs). These workflows were designed to streamline the creative process and deliver high-quality, visually compelling advertisements. The initial workflow focuses primarily on generating a background around the masked product using inpainting and depth mapping.
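To make "generating a background around the masked product" concrete, here is a minimal NumPy sketch. It assumes the product mask is a 2-D array where 1 marks product pixels and 0 marks background, and that the diffusion model returns an RGB image of the same size; the function names are hypothetical and this is not how ComfyUI implements it internally. The inpainting mask is the inverse of the product mask, and a final composite pastes the original product pixels back so the shoe itself stays untouched.

```python
import numpy as np


def background_inpaint_mask(product_mask: np.ndarray) -> np.ndarray:
    """Invert a product mask (1 = product) into an inpainting mask (1 = regenerate)."""
    return 1.0 - product_mask.astype(np.float32)


def composite(product_img: np.ndarray, generated_img: np.ndarray,
              product_mask: np.ndarray) -> np.ndarray:
    """Blend original product pixels over a generated background.

    product_mask may be binary or soft (values in [0, 1]); soft values
    give a gradual transition at the product edge.
    """
    # Add a trailing axis so the H x W mask broadcasts over the RGB channels.
    alpha = product_mask.astype(np.float32)[..., None]
    out = alpha * product_img.astype(np.float32) + (1.0 - alpha) * generated_img.astype(np.float32)
    return out.round().clip(0, 255).astype(np.uint8)
```

Keeping the original pixels in the composite is one simple way to support the research finding that ads need accurate product representation, since the model only ever changes what lies outside the mask.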

Challenge

While effective, this workflow does not fully integrate the product into complex environments, and its outputs lack proper lighting and contextual alignment.

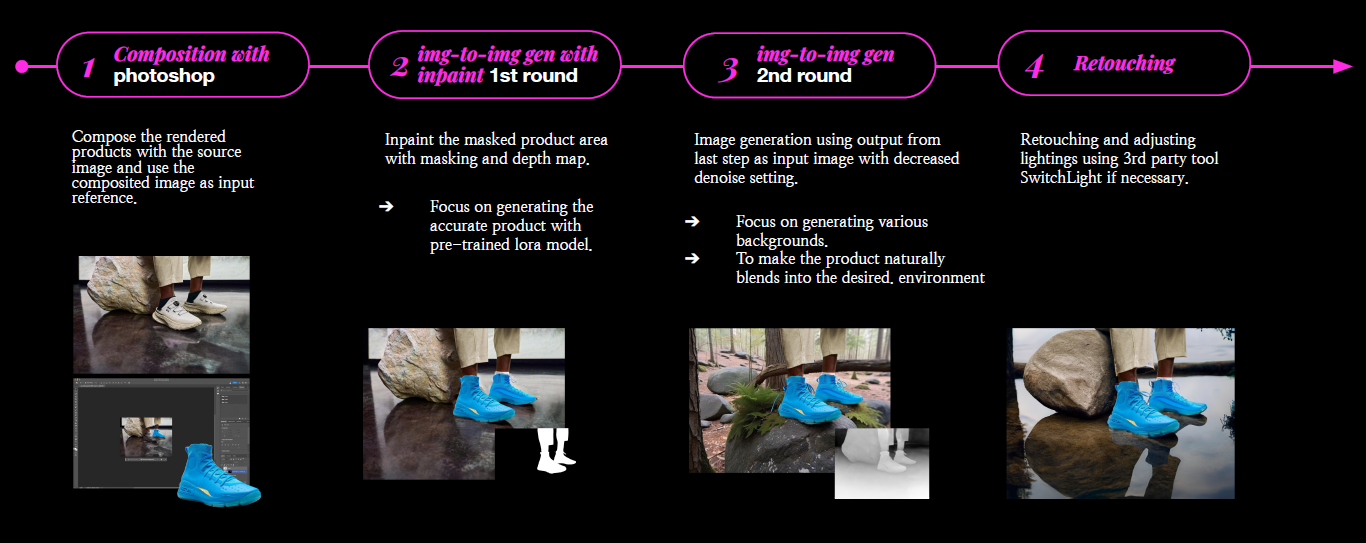

Improvement

To ensure the product blends seamlessly with variations in lighting and depth, we added a two-stage inpainting and img2img process to the workflow. The first round refines the product's appearance, while the second round generates different contextual backgrounds.

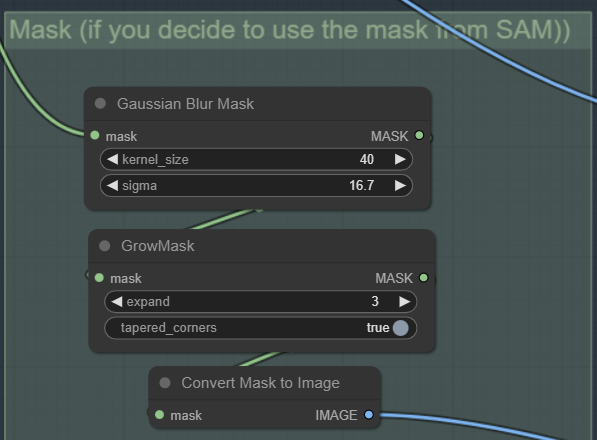

Essential Nodes Research

The Segment Anything node is crucial for accurately isolating objects, allowing precise masking for inpainting or background modifications. The mask processing step improves the segmentation by smoothing edges, expanding coverage, and refining details, which leads to better blending in the final output. Finally, loading the model checkpoint is essential for selecting the appropriate Stable Diffusion model, which determines the style, realism, and overall quality of the generated image.
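The mask processing step (expanding coverage, then smoothing edges) can be sketched in plain NumPy as a dilation followed by a blur. This is an illustrative assumption, not the exact operation of any ComfyUI node: `grow_mask` and `feather_mask` are hypothetical names, the dilation uses a square window, and the feathering uses a simple box blur where real tools may use Gaussian or tapered kernels.

```python
import numpy as np


def grow_mask(mask: np.ndarray, pixels: int) -> np.ndarray:
    """Expand a binary mask by `pixels` using a square (Chebyshev) dilation."""
    m = mask.astype(bool)
    padded = np.pad(m, pixels, mode="constant", constant_values=False)
    h, w = m.shape
    out = np.zeros_like(m)
    # OR together every shifted copy inside the (2p+1) x (2p+1) window.
    for dy in range(2 * pixels + 1):
        for dx in range(2 * pixels + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def feather_mask(mask: np.ndarray, radius: int) -> np.ndarray:
    """Soften mask edges with a box blur of the given radius; returns floats in [0, 1]."""
    m = mask.astype(np.float32)
    k = 2 * radius + 1
    # Edge padding avoids darkening masks that touch the image border.
    padded = np.pad(m, radius, mode="edge")
    h, w = m.shape
    acc = np.zeros_like(m)
    for dy in range(k):
        for dx in range(k):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / (k * k)
```

A typical chain would be `soft = feather_mask(grow_mask(segment_mask, 8), 4)`, where `segment_mask` stands in for the Segment Anything output and the pixel values are placeholders. Growing first and feathering second places the semi-transparent band just outside the original object boundary, so stray edge pixels from segmentation are covered before the blend softens the transition.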

Results & Impact

Through this iterative process, we were able to generate a wide variety of shoe visuals and accompanying promotional content, refining the workflows to improve efficiency and output quality. We focused on identifying the Minimum Viable Product (MVP), ensuring that the system produced a visually appealing and consistent result that met Under Armour's brand standards. This MVP was successfully adopted company-wide, marking a significant improvement in how the company approached creative production for marketing campaigns. The integration of AI and automation not only reduced time and labor but also allowed for greater flexibility in generating content, ultimately leading to more dynamic and engaging advertisements.

Store Design